[Avg. reading time: 4 minutes]

Volume

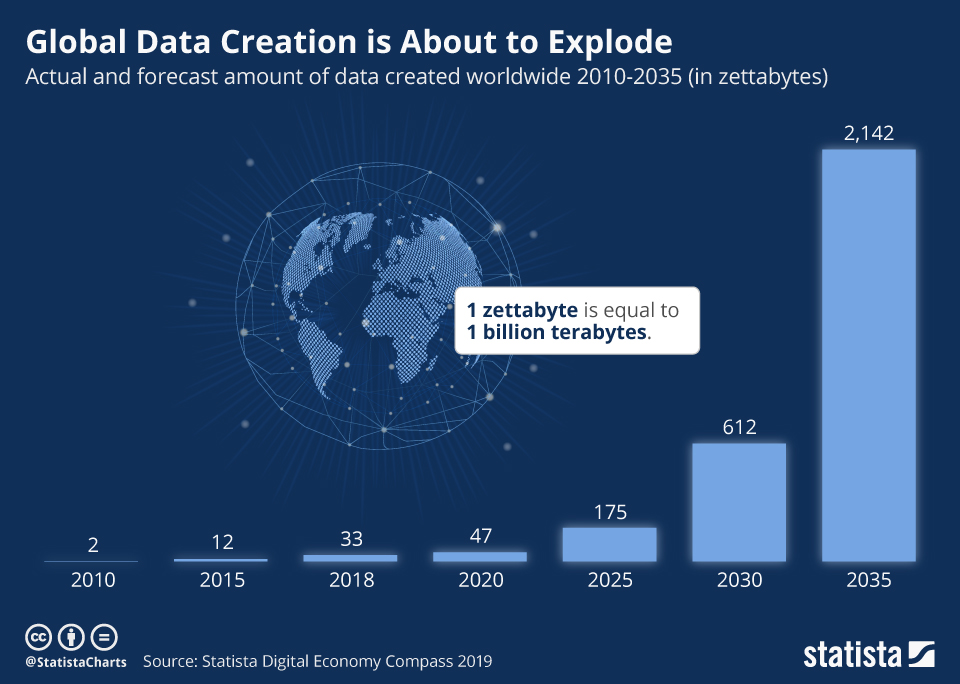

Volume refers to the sheer amount of data generated every second from various sources around the world. It’s one of the core characteristics that makes data "big."

With the rise of the internet, smartphones, IoT devices, social media, and digital services, the amount of data being produced has reached zettabyte scale and is projected to grow toward yottabyte scale.

- YouTube users upload 500+ hours of video every minute.

- Facebook generates 4 petabytes of data per day.

- A single connected car can produce 25 GB of data per hour.

- Enterprises generate terabytes to petabytes of log, transaction, and sensor data daily.
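To put these figures on a common footing, the following minimal Python sketch converts them into rates and totals. It assumes decimal (SI) units, and the fleet of one million cars each driving one hour per day is a hypothetical chosen only for illustration; the helper names `to_bytes` and `humanize` are likewise made up for this example.

```python
# Decimal (SI) byte units. Binary units (KiB, MiB, ...) would give
# slightly different numbers.
UNITS = {
    "KB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12,
    "PB": 10**15, "EB": 10**18, "ZB": 10**21, "YB": 10**24,
}

def to_bytes(value, unit):
    """Convert a value in the given unit to bytes."""
    return value * UNITS[unit]

def humanize(num_bytes):
    """Format a byte count using the largest unit that keeps the value >= 1."""
    for unit in reversed(list(UNITS)):
        if num_bytes >= UNITS[unit]:
            return f"{num_bytes / UNITS[unit]:.2f} {unit}"
    return f"{num_bytes} B"

SECONDS_PER_DAY = 24 * 60 * 60

# 4 PB per day expressed as a sustained per-second rate.
per_second = to_bytes(4, "PB") / SECONDS_PER_DAY

# Hypothetical fleet: 1,000,000 connected cars, each driving 1 hour per day
# at 25 GB per hour.
fleet_daily = to_bytes(25, "GB") * 1_000_000

print("4 PB/day is about", humanize(per_second), "per second")  # 46.30 GB
print("Hypothetical fleet produces", humanize(fleet_daily), "per day")  # 25.00 PB
```

Even a modest-sounding per-device rate adds up to petabytes per day once it is multiplied across a large fleet.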

Why It Matters

With the rise of Artificial Intelligence (AI) and especially Large Language Models (LLMs) like ChatGPT, Bard, and Claude, the volume of data being generated, consumed, and required for training is skyrocketing.

- LLMs need massive training data.

- LLM-generated content is growing exponentially: blogs, reports, summaries, images, audio, and even code.

- Storage systems must scale horizontally to handle petabytes or more.

- Traditional single-server databases can’t manage this scale efficiently.

- Volume impacts data ingestion, processing speed, query performance, and cost.

- It influences how data is partitioned, replicated, and compressed in distributed systems.
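As a rough illustration of how volume interacts with partitioning, replication, and compression, here is a back-of-the-envelope capacity estimate in Python. Every default below (3x replication, a 4:1 compression ratio, 128 MB partitions, 10 TB of usable space per node) is an assumption chosen for the example, not a recommendation, and `storage_plan` is a hypothetical helper rather than part of any library.

```python
import math

def storage_plan(
    raw_bytes,
    replication_factor=3,                # assumed: three copies of each partition
    compression_ratio=4.0,               # assumed: 4:1 compression
    partition_bytes=128 * 10**6,         # assumed: 128 MB target partition size
    node_capacity_bytes=10 * 10**12,     # assumed: 10 TB usable per node
):
    """Estimate the stored footprint, partition count, and node count."""
    compressed = raw_bytes / compression_ratio
    stored = compressed * replication_factor      # every replica takes space
    partitions = math.ceil(compressed / partition_bytes)
    nodes = math.ceil(stored / node_capacity_bytes)
    return {
        "compressed_bytes": compressed,
        "stored_bytes": stored,
        "partitions": partitions,
        "nodes": nodes,
    }

# 1 PB of raw data under the assumptions above.
plan = storage_plan(10**15)
print(plan["partitions"], "partitions across at least", plan["nodes"], "nodes")
```

Under these assumptions, compression shrinks 1 PB to 250 TB, replication grows it back to 750 TB on disk, and the data is split into roughly two million partitions. Changing any one parameter shifts both cost and the amount of parallelism available for processing.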